Image credit: [Unsplash]https://unsplash.com/photos/gcgves5H_Ac)

Photo by Markus Spiske on Unsplash

Prerequistes

- R is installed

- RStudio is installed

- download the workshop materials

- open "CPA_2020_data_cleaning_tidyverse.Rproj" to launch Rstudio

- the following packages are installed

install.packages(c("tidyverse", "here","snakecase"))You can learn more about R projects at https://support.rstudio.com/hc/en-us/articles/200526207-Using-Projects.

What is Data Cleaning?

"Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data"

Wikipedia

What is Data Cleaning?

"Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data"

Wikipedia

It is often said that 80% of data analysis is spent on the process of cleaning and preparing the data

Dasu and Johnson, 2003

What is Data Cleaning?

"Data cleansing or data cleaning is the process of detecting and correcting (or removing) corrupt or inaccurate records from a record set, table, or database and refers to identifying incomplete, incorrect, inaccurate or irrelevant parts of the data and then replacing, modifying, or deleting the dirty or coarse data"

Wikipedia

It is often said that 80% of data analysis is spent on the process of cleaning and preparing the data

Dasu and Johnson, 2003

Garbage in, garbage out.

Depends on who you ask, mid 20th century?

Dasu, T., & Johnson, T. (2003). Exploratory data mining and data cleaning (Vol. 479). John Wiley & Sons.

Wu, S. (2013), "A review on coarse warranty data and analysis" (PDF), Reliability Engineering and System, 114: 1–11, doi:10.1016/j.ress.2012.12.021

What is the Tidyverse?

The tidyverse is an opinionated collection of R packages designed for data science. All packages share an underlying design philosophy, grammar, and data structures.

www.tidyverse.org

- There is nothing that can be done using the tidyverse which cannot be accomplished using base R or other packages.

- I prefer these packages because I found a significant leap forward in the robustness of my code, quicker debugging time, and quicker to perform checks on my data.

Further, I recommend the book R for Data science which is freely available online at https://r4ds.had.co.nz/

What is the Tidyverse?

The packages within the tidyverse are categorized into two parts: 8 core packages and 15 non-core packages.

There is a higher level management package called tidyverse which helps maintain the whole collection of packages. All 23 packages can be installed with a single function.

install.packages("tidyverse")

The Tidyverse

The core packages are likely to be used each time you sit down to write code, and loading JUST the core 8 packages can be done with a single function.

library(tidyverse)## -- Attaching packages ------------------------------ tidyverse 1.3.0 --## v ggplot2 3.3.2 v purrr 0.3.4## v tibble 3.0.1 v dplyr 1.0.2## v tidyr 1.1.0 v stringr 1.4.0## v readr 1.3.1 v forcats 0.5.0## -- Conflicts --------------------------------- tidyverse_conflicts() --## x dplyr::filter() masks stats::filter()## x dplyr::group_rows() masks kableExtra::group_rows()## x dplyr::lag() masks stats::lag()

The Tidyverse

The core packages are likely to be used each time you sit down to write code, and loading JUST the core 8 packages can be done with a single function.

library(tidyverse)## -- Attaching packages ------------------------------ tidyverse 1.3.0 --## v ggplot2 3.3.2 v purrr 0.3.4## v tibble 3.0.1 v dplyr 1.0.2## v tidyr 1.1.0 v stringr 1.4.0## v readr 1.3.1 v forcats 0.5.0## -- Conflicts --------------------------------- tidyverse_conflicts() --## x dplyr::filter() masks stats::filter()## x dplyr::group_rows() masks kableExtra::group_rows()## x dplyr::lag() masks stats::lag()A nice thing to note here is that once the tidyverse package is loaded, it explicitly tells you that the core 8 packages are attached. It also supplies a message about which functions are now masked by the newly loaded package functions.

Tip: At anytime, you can run the function tidyverse_conflicts() to see the message again.

Dplyr

Dplyr provides a simple set of “verb” functions that make basic data manipulation easier:

filter()- creates a subset of the data by extracting rows which meet certain criteria

select()- creates a subset of the data by extracting a set of columns we identify by name

mutate()- creates/transforms variables using pre-existing variables and adds them to the end of our tibble

Dplyr

Dplyr provides a simple set of “verb” functions that make basic data manipulation easier:

filter()- creates a subset of the data by extracting rows which meet certain criteria

select()- creates a subset of the data by extracting a set of columns we identify by name

mutate()- creates/transforms variables using pre-existing variables and adds them to the end of our tibble

group_by()- adds grouping meta-data to the data

Dplyr

Dplyr provides a simple set of “verb” functions that make basic data manipulation easier:

filter()- creates a subset of the data by extracting rows which meet certain criteria

select()- creates a subset of the data by extracting a set of columns we identify by name

mutate()- creates/transforms variables using pre-existing variables and adds them to the end of our tibble

group_by()- adds grouping meta-data to the data

summarise()- applies summary functions to columns in our data and returns a tibble of the results

Dplyr

This link will download the cheat sheet:

https://www.rstudio.org/links/data_transformation_cheat_sheet

This link has all of the help documentation and listing of available funcions:

https://www.rdocumentation.org/packages/dplyr/versions/0.7.8

Magrittr

The pipe operator %>% is a very useful way of writing human-readable code, while avoiding intermediate objects within R to hold results.

This operator can be read as the phrase "and then...". It takes the results from the left side of the operator and inserts it as the FIRST argument of the right side of the operator.

# original function callf(x,y)# becomesx %>% f(y)# original function callfilter(mtcars, cyl == 4)# becomesmtcars %>% filter(cyl == 4)Magrittr

The pipe operator %>% is a very useful way of writing human-readable code, while avoiding intermediate objects within R to hold results.

This operator can be read as the phrase "and then...". It takes the results from the left side of the operator and inserts it as the FIRST argument of the right side of the operator.

# original function callf(x,y)# becomesx %>% f(y)Tab completion for variable names

Can chain as many functions together as you would like. Though if the chain grows to more than a sequence of 10, it is recommended to save the results as an intermediate object and begin a new chain.

If you need to pipe the leftside of the operator into another argument, use the . placholder

Data Cleaning/Cleansing

Data Quality can be broken down into a few categories:

- Validity

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- What is your first name? 3 would not be a valid response

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- Accuracy

- This is what psychologists typically refer to as validity or closeness of a value to a known standard or true value.

Data Cleaning/Cleansing

Data Quality can be broken down into a few categories:

- Validity

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- What is your first name? 3 would not be a valid response

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- Accuracy

- This is what psychologists typically refer to as validity or closeness of a value to a known standard or true value.

- Completeness

- The degree to which all required measures are known.

- For us, this pertains to missing data

Data Cleaning/Cleansing

Data Quality can be broken down into a few categories:

- Validity

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- What is your first name? 3 would not be a valid response

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- Accuracy

- This is what psychologists typically refer to as validity or closeness of a value to a known standard or true value.

- Completeness

- The degree to which all required measures are known.

- For us, this pertains to missing data

- Consistency

- Pertains to incompatabilities within the data itself or as can be corroborated with other existing data

Data Cleaning/Cleansing

Data Quality can be broken down into a few categories:

- Validity

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- What is your first name? 3 would not be a valid response

- In the sense of either valid or invalid, and not the degree to which a measurement corresponds to a true (possibly unknown) value.

- Accuracy

- This is what psychologists typically refer to as validity or closeness of a value to a known standard or true value.

- Completeness

- The degree to which all required measures are known.

- For us, this pertains to missing data

- Consistency

- Pertains to incompatabilities within the data itself or as can be corroborated with other existing data

- Uniformity

- Are all the values within a set of measures specified using the same units of measurement (inches vs cm)

Helpful blog which provides a great summary and description of data cleaning https://towardsdatascience.com/the-ultimate-guide-to-data-cleaning-3969843991d4

Wikipedia entry which the blog was based upon https://en.wikipedia.org/wiki/Data_cleansing

Data-Profiling

Data-profiling is a technique for getting to know data at a deeper level. It consists of a series questions and checks we perform as we explore the data and see whether certain constraints were met.

We can do this using descriptive statistics and/or visualizations.

We will use both!

Data cleaning and profiling is an iterative process

- We won't get everything right the first time around

- We might improve or find a better way

- There isn't always a clear place to start, but we have to start somewhere

Data-Profiling: Goal

Identify problems/issues present within a dataset

- Fix it!

- Refer to original material or codebook to help resolve the problem.

- Contact participants to verify response (not usually possible).

- Infer correct values.

- Typos might seem harmless, but there could be ambiguous problems: "malle" or "fmale"

Data-Profiling: Goal

Identify problems/issues present within a dataset

- Fix it!

- Refer to original material or codebook to help resolve the problem.

- Contact participants to verify response (not usually possible).

- Infer correct values.

- Typos might seem harmless, but there could be ambiguous problems: "malle" or "fmale"

- Ignore it!

- We flag a value as missing (

NA) so that R treats it appropriately.

- We flag a value as missing (

Data-Profiling: Goal

Identify problems/issues present within a dataset

- Fix it!

- Refer to original material or codebook to help resolve the problem.

- Contact participants to verify response (not usually possible).

- Infer correct values.

- Typos might seem harmless, but there could be ambiguous problems: "malle" or "fmale"

- Ignore it!

- We flag a value as missing (

NA) so that R treats it appropriately.

- We flag a value as missing (

- We impute a sensible guess as to what the value could be.

- Section 3 of this workshop will walkthrough profiling a dataset for missingness, but not imputation methods (I do recommend using the mice package)

- Fix it!

- Refer to original material or codebook to help resolve the problem.

- Contact participants to verify response (not usually possible).

- Infer correct values.

- Typos might seem harmless, but there could be ambiguous problems: "malle" or "fmale"

- Ignore it!

- We flag a value as missing (

NA) so that R treats it appropriately.

- We impute a sensible guess as to what the value could be.

- This will be treated in full later in this workshop.

Each time we fix something we will update our "cleaning_script.R" file We are going to use a clean-as-we-go strategy

- CAUTION: be very careful of any corrections you make to a data set. It is recommended to keep an unchanged copy of the original data at a separate location.

Data-Profiling: Goal

A problem that often occurs at this point is understanding what to look for within our data.

What constitutes a problem that needs to be addressed?

How do we know that we found them all?

Our goal is to have our data be as error-free and internally consistent as possible.

We have to start somewhere, so generally I start looking at numerical data first, and the categorical data after (we might also want to look at both types simultaneously too).

Data-Profiling: Numerical Data

Different types of data require us to ask different questions.

- Is a variable meant to be a unique identifier?

- Like a participant ID

- Is there a valid range?

- (upper or lower boundaries)

- Can there be decimals?

- (or what level of precision)

- Do the values make "sense" regarding the scale of measurement?

- e.g., A height of 6' seems plausible, but a height of 6 meters does not.

Data-Profiling: Numerical Data

Different types of data require us to ask different questions.

- Is a variable meant to be a unique identifier?

- Like a participant ID

- Is there a valid range?

- (upper or lower boundaries)

- Can there be decimals?

- (or what level of precision)

- Do the values make "sense" regarding the scale of measurement?

- e.g., A height of 6' seems plausible, but a height of 6 meters does not.

- Were missing values coded properly?

Let's Profile Some Data

The dataset we will use in this section of the workshop is a publicly available dataset.

I believe it serves a few purposes for us:

- You might not have substantive knowledge of superheros, but the principles and techniques we discuss can be helpful anyway.

- This puts a stronger emphasis on the data-profiling aspect of the cleaning process

- Sometimes we might be "too" familiar with our data and miss things

Importing Data

data_superhero <- read_csv(file = here("data", "heroes_information.csv"))## Warning: Missing column names filled in: 'X1' [1]## Parsed with column specification:## cols(## X1 = col_double(),## name = col_character(),## Gender = col_character(),## `Eye color` = col_character(),## Race = col_character(),## `Hair color` = col_character(),## Height = col_double(),## Publisher = col_character(),## `Skin color` = col_character(),## Alignment = col_character(),## Weight = col_double()## )

Importing Data

data_superhero <- read_csv(file = here("data", "heroes_information.csv"))## Warning: Missing column names filled in: 'X1' [1]## Parsed with column specification:## cols(## X1 = col_double(),## name = col_character(),## Gender = col_character(),## `Eye color` = col_character(),## Race = col_character(),## `Hair color` = col_character(),## Height = col_double(),## Publisher = col_character(),## `Skin color` = col_character(),## Alignment = col_character(),## Weight = col_double()## )spec(data_superhero)

Importing Data

data_superhero <- read_csv(file = here("data", "heroes_information.csv"))## Warning: Missing column names filled in: 'X1' [1]## Parsed with column specification:## cols(## X1 = col_double(),## name = col_character(),## Gender = col_character(),## `Eye color` = col_character(),## Race = col_character(),## `Hair color` = col_character(),## Height = col_double(),## Publisher = col_character(),## `Skin color` = col_character(),## Alignment = col_character(),## Weight = col_double()## )spec(data_superhero)

I prefer the readr package when importing "flat" data. It provides instant feedback on the data-constraints imposed for each column, displays a progress bar for long import times, and provides feedback on the type constraints imposed on your data.

Note that many tidyverse functions replace base R functions by substituting a '_' in place of a "."

Notice that we get a read-out of how R parsed the data it found within the csv file.

We also get warnings of some other problems.

I prefer snake_case because it is human-readable and machine readable too!!!!!

X1?

Let's take a peak at the mysterious variable X1

glimpse(data_superhero)## Rows: 734## Columns: 11## $ X1 <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, ...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Ab...## $ Gender <chr> "Male", "Male", "Male", "Male", "Male", "Male", "Male"...## $ `Eye color` <chr> "yellow", "blue", "blue", "green", "blue", "blue", "bl...## $ Race <chr> "Human", "Icthyo Sapien", "Ungaran", "Human / Radiatio...## $ `Hair color` <chr> "No Hair", "No Hair", "No Hair", "No Hair", "Black", "...## $ Height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191,...## $ Publisher <chr> "Marvel Comics", "Dark Horse Comics", "DC Comics", "Ma...## $ `Skin color` <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-",...## $ Alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "...## $ Weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108,...

X1?

Let's take a peak at the mysterious variable X1

glimpse(data_superhero)## Rows: 734## Columns: 11## $ X1 <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, ...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Ab...## $ Gender <chr> "Male", "Male", "Male", "Male", "Male", "Male", "Male"...## $ `Eye color` <chr> "yellow", "blue", "blue", "green", "blue", "blue", "bl...## $ Race <chr> "Human", "Icthyo Sapien", "Ungaran", "Human / Radiatio...## $ `Hair color` <chr> "No Hair", "No Hair", "No Hair", "No Hair", "Black", "...## $ Height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191,...## $ Publisher <chr> "Marvel Comics", "Dark Horse Comics", "DC Comics", "Ma...## $ `Skin color` <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-",...## $ Alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "...## $ Weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108,...n_distinct(data_superhero$X1)## [1] 734Unique Constraints

A unique constraint is a restriction imposed on a variable to allow for a particular observation unit to be identifiable and distinct from all other observational units.

In other words each value in this variable MUST be associated with one (and only one) observation.

One of the main uses of this type of constraint is unique identifiers for each subject of analysis to prevent spill-over or contamination.

Unique Constraints

A unique constraint is a restriction imposed on a variable to allow for a particular observation unit to be identifiable and distinct from all other observational units.

In other words each value in this variable MUST be associated with one (and only one) observation.

One of the main uses of this type of constraint is unique identifiers for each subject of analysis to prevent spill-over or contamination.

For instance, each subject should have their own ID such that all data are properly attributed to that subject.

Same goes for structurally nested data like education data where students are studied within classrooms, with schools. Each individual subject should be have a unique identifier, each classroom, and each school.

Some functions within R might require such a variable in order to run an analysis (such as repeated-measures, or longitudinal analyses).

They can also be immensely useful for merging data sources together (more on this later).

Pro-tip: Unique Identifiers

If the data set does NOT have a unique identifier for each row (or these identifiers are stored as row names), then I highly recommend you create one.

data_superhero %>% rownames_to_column(var = "id")# ordata_superhero %>% add_column(id = 1:nrow(data_superhero), .before = 1)I prefer the first because row names in R are already constrained to be unique.

Pro-tip: Naming Conventions

Whenever you are creating a name for something in R. BE CLEAR AND DESCRIPTIVE.

I highly recommend following a style guide.

https://google.github.io/styleguide/Rguide.html

I use snake_case because I find it to easier to read, and it has the added benefit of being machine readable

# which do you prefervariable1variable_1anxiety_scale_item_1 # snake_caseanxietyscaleitem1anxietyScaleItem1 # camelCaseImporting Data: Consistent Variable Names

# Import data using a relative file pathdata_superhero <- read_csv(file = here("data", "heroes_information.csv")) %>% # rename the first column as "id" rename("id" = X1) %>% # rename variables to make them easier to use rename_with(.fn = snakecase::to_snake_case)# check that names were fixed properlycolnames(data_superhero)## [1] "id" "name" "gender" "eye_color" "race" ## [6] "hair_color" "height" "publisher" "skin_color" "alignment" ## [11] "weight"Importing Data: Consistent Variable Names

# Import data using a relative file pathdata_superhero <- read_csv(file = here("data", "heroes_information.csv")) %>% # rename the first column as "id" rename("id" = X1) %>% # rename variables to make them easier to use rename_with(.fn = snakecase::to_snake_case)# check that names were fixed properlycolnames(data_superhero)## [1] "id" "name" "gender" "eye_color" "race" ## [6] "hair_color" "height" "publisher" "skin_color" "alignment" ## [11] "weight"

Importing Data: Data-Type Constraints

Data-type constraints is next thing I check once I load data into R.

The readr package uses the first 1000 rows in a dataset to guess the most appropriate atomic type for each column in your dataset (they can be changed later):

- character

- "hello world"

- double

- 3.1459

- integer

- 4L

- logical

- TRUE, FALSE

A data.frame/tibble can be thought of as a way of storing your data which retains important structural information. Each row is a single observational unit, and each column tracks some attribute (quantitative or qualitative) about that observational unit. For instance, each row could be a subject within a study, and each column would be a measured/observed quality pertaining to that subject (like first name, student id, etc.).

I got 99 problems(), but Parsing ain't One

If read_csv() encountered parsing problems, it prints a warning to the console.

You can also extract and View() the problems using the following code.

# examine parsing problems in R's data viewerproblems(data_superhero) %>% View()## [1] row col expected actual ## <0 rows> (or 0-length row.names)Data Profiling: Numerical Variables

Now that the variable names have been fixed, we can start profiling our data.

Data cleaning is often an iterative process. You might uncover a problem that needs to be addressed before you finish fixing the problem you started to clean. Let's start by taking a quick peak at our data

glimpse(data_superhero)## Rows: 734## Columns: 11## $ id <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Abra...## $ gender <chr> "Male", "Male", "Male", "Male", "Male", "Male", "Male", ...## $ eye_color <chr> "yellow", "blue", "blue", "green", "blue", "blue", "blue...## $ race <chr> "Human", "Icthyo Sapien", "Ungaran", "Human / Radiation"...## $ hair_color <chr> "No Hair", "No Hair", "No Hair", "No Hair", "Black", "No...## $ height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191, 1...## $ publisher <chr> "Marvel Comics", "Dark Horse Comics", "DC Comics", "Marv...## $ skin_color <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-", "...## $ alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "go...## $ weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108, 9...Range Constraints

Range constraints require a little more thought. It all depends on knowing your data, and cleaning your data essentially is getting to know your data in greater detail to be able to make informed decisions about problems.

For instance, if you have data which represents counts of things (e.g., number of symptoms, cigarettes smoked within a certain time frame), these can be checked by you.

Likewise, some variables cannot take on negative values as their scale of measurement renders negative values non-sensical (e.g., heights and weights). Even a value of zero might not be a valid value to observe.

Sometimes, variables might have a valid lower boundary, upper boundary, or both.

Example of Lower Boundary Constraints

Check for "out-of-range" heights and weights.

# This checks for any row with EITHER a weight <= OR a height <= 0data_superhero %>% filter(weight <= 0 | height <= 0) %>% View()# This checks for any row with BOTH a weight <= and a height <= 0data_superhero %>% filter(weight <= 0, height <= 0) %>% View()Remember, ALWAYS check that R is answering the question you think you asked

- I am not saving the results from this code into an object

- I just want to see what the results look like

- I am piping the results into the View() function to use R's data viewer to inspect the results

- Getting the correct boolean logic statements can be tricky, so play around. These pipes DON'T affect our data in any way.

Example of Boundary Constraints

Dplyr has a between() function which can be helpful when used in conjunction with the negation operator !

# What if we were to assume that any height larger than 300cm is abnormally large?data_superhero %>% filter(!between(x = height, left = 0, right = 300))## # A tibble: 225 x 11## id name gender eye_color race hair_color height publisher skin_color## <dbl> <chr> <chr> <chr> <chr> <chr> <dbl> <chr> <chr> ## 1 4 Abra~ Male blue Cosm~ Black -99 Marvel C~ - ## 2 6 Adam~ Male blue - Blond -99 NBC - He~ - ## 3 14 Alex~ Male - Human - -99 Wildstorm - ## 4 15 Alex~ Male - - - -99 NBC - He~ - ## 5 18 Alla~ Male - - - -99 Wildstorm - ## 6 21 Ando~ Male - - - -99 NBC - He~ - ## 7 23 Angel Male - Vamp~ - -99 Dark Hor~ - ## 8 26 Ange~ Female - - - -99 Image Co~ - ## 9 32 Anti~ Male - - - -99 Image Co~ - ## 10 35 Aqua~ Male blue - Blond -99 DC Comics - ## # ... with 215 more rows, and 2 more variables: alignment <chr>, weight <dbl>

Example of Boundary Constraints

Dplyr has a between() function which can be helpful when used in conjunction with the negation operator !

# What if we were to assume that any height larger than 300cm is abnormally large?data_superhero %>% filter(!between(x = height, left = 0, right = 300))## # A tibble: 225 x 11## id name gender eye_color race hair_color height publisher skin_color## <dbl> <chr> <chr> <chr> <chr> <chr> <dbl> <chr> <chr> ## 1 4 Abra~ Male blue Cosm~ Black -99 Marvel C~ - ## 2 6 Adam~ Male blue - Blond -99 NBC - He~ - ## 3 14 Alex~ Male - Human - -99 Wildstorm - ## 4 15 Alex~ Male - - - -99 NBC - He~ - ## 5 18 Alla~ Male - - - -99 Wildstorm - ## 6 21 Ando~ Male - - - -99 NBC - He~ - ## 7 23 Angel Male - Vamp~ - -99 Dark Hor~ - ## 8 26 Ange~ Female - - - -99 Image Co~ - ## 9 32 Anti~ Male - - - -99 Image Co~ - ## 10 35 Aqua~ Male blue - Blond -99 DC Comics - ## # ... with 215 more rows, and 2 more variables: alignment <chr>, weight <dbl>We could insert code similar to this into our chain, but I recommend not subsetting out whole rows of code at this point. This is akin to list-wise deletion. Many modeling functions in R will perform listwise deletion automatically (unless you tell it otherwise). Depending on how you want to handle missing our outlying or influential cases, you might need to retain as much of your data as possible.

Range Constraints

p <- data_superhero %>% ggplot(aes(x = height)) + geom_histogram(bins = 20) + geom_vline(aes(xintercept = 0), color = "red")plotly::ggplotly(p)- Start with the data, then ...

- create a canvas with height on the x-axis, then ...

- generate a histogram, then ..

- add a vertical line at my boundary constraint

Range Constraints

p <- data_superhero %>% ggplot(aes(x = weight, y = height)) + geom_point() + geom_hline(yintercept = 0, color = "red") + geom_vline(xintercept = 0, color = "red")plotly::ggplotly(p)- Start with the data, then ...

- create a canvas with height on the y-axis and weight on the x-axis, then ...

- generate a scatterplot, then ..

- add a vertical and horizontal lines at my boundary constraints

We found a problem, now what?

We know that we have cases with negative height and weight, so what should we do about?

# examine negative or zero height valuesdata_superhero %>% filter(height <= 0) %>% count(height)## # A tibble: 1 x 2## height n## <dbl> <int>## 1 -99 217# examine negative or zero weight valuesdata_superhero %>% filter(weight <= 0) %>% count(weight)## # A tibble: 1 x 2## weight n## <dbl> <int>## 1 -99 237

We found a problem, now what?

That suspicious value of -99 was probably a way of encoding missing data, so we should update our cleaning script with two things.

- We insert our

mutate()we just created into our data pipeline (even if the negatives will be gone soon)- Remember to invert the operators to exclude the negative values

- We should convert these values into

NAso that R treats them appropriately.

data_superhero %>% mutate(height = na_if(height, y= -99), weight = na_if(weight, y = -99)) %>% View()

Was the problem fixed?

## # A tibble: 734 x 11## id name gender eye_color race hair_color height publisher skin_color## <dbl> <chr> <chr> <chr> <chr> <chr> <dbl> <chr> <chr> ## 1 0 A-Bo~ Male yellow Human No Hair 203 Marvel C~ - ## 2 1 Abe ~ Male blue Icth~ No Hair 191 Dark Hor~ blue ## 3 2 Abin~ Male blue Unga~ No Hair 185 DC Comics red ## 4 3 Abom~ Male green Huma~ No Hair 203 Marvel C~ - ## 5 4 Abra~ Male blue Cosm~ Black NA Marvel C~ - ## 6 5 Abso~ Male blue Human No Hair 193 Marvel C~ - ## 7 6 Adam~ Male blue - Blond NA NBC - He~ - ## 8 7 Adam~ Male blue Human Blond 185 DC Comics - ## 9 8 Agen~ Female blue - Blond 173 Marvel C~ - ## 10 9 Agen~ Male brown Human Brown 178 Marvel C~ - ## # ... with 724 more rows, and 2 more variables: alignment <chr>, weight <dbl>

Pro-Tip: Multiple Mutates

Mutate if a column meets a particular criterion

data_superhero %>% # replace -99 with NA for all double variables mutate_if(.predicate = is_double, .funs = na_if, y = -99)Mutate at all specific columns

data_superhero %>% # replace -99 with NA for all double variables mutate_at(.vars = vars(weight,height), .funs = na_if, y = -99)

Our Data Pipeline So Far

# Start by importing the data# I'm using the here::here() function to make the file path relative to the project directorydata_superhero <- read_csv(file = here("data", "heroes_information.csv")) %>% # rename the first column as "id" rename("id" = X1) %>% # rename variables to make them easier to use rename_with(.fn = to_snake_case) %>% # replace -99 with NA mutate(height = na_if(height, y= -99), weight = na_if(weight, y = -99))- We imported our data (using a relative path), then ...

- renamed the

X1variable toid, then ... - fixed variable names, then ...

- replaced values of -99 with

NAfor height and weight

Data-Profiling: Categorical Data

What are the possible values/levels?

- e.g., Did your study have 4 conditions?

Are there more groups than you expected?

Should some "groups" be recast as a single group?

- e.g., similarly named categories, typos, or conceptually similar groupings

- What proportion of cases are missing across levels of your factors?

What are the possible values/levels?

Categorical data in R is called a factor, and each factor should have a known and fixed set of levels.

If you know how many levels you have, then we can explicitly coerce one of our character vectors into a factor.

For example, if you designed an experiment with low, medium, and high stress conditions, then you should have a factor with only three levels.

In essence, we are saying that each subject belongs to a particular category.

Prepping Categorical Data

For some categorical data we want to remove accidental groups in data that could be the result of typographical or data-entry errors.

Consider the following chunk of code:

# create example dataundergrad_year <- c("First","Second","Third","second")# convert to a factor without explicitly setting the levelsundergrad_year_fct <- parse_factor(undergrad_year)undergrad_year_fct## [1] First Second Third second## Levels: First Second Third secondWe want to be sure that when we clean up certain variables, we are not losing potentially important aspects of our data.

So, let's take a peak at our data to determine which variables should be cleaned to prevent case-sensitivity related errors.

Checking out our data

Our data set has 8 categorical variables, some of them fall easily into having known and fixed sets of levels (alignment), while others could have quite a few unforeseen levels (gender, eye_color, race, hair_color, publisher, skin_color, and alignment)

I also opted to not alter the name variable (we might lose proper spelling).

glimpse(data_superhero)## Rows: 734## Columns: 11## $ id <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Abra...## $ gender <chr> "Male", "Male", "Male", "Male", "Male", "Male", "Male", ...## $ eye_color <chr> "yellow", "blue", "blue", "green", "blue", "blue", "blue...## $ race <chr> "Human", "Icthyo Sapien", "Ungaran", "Human / Radiation"...## $ hair_color <chr> "No Hair", "No Hair", "No Hair", "No Hair", "Black", "No...## $ height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191, 1...## $ publisher <chr> "Marvel Comics", "Dark Horse Comics", "DC Comics", "Marv...## $ skin_color <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-", "...## $ alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "go...## $ weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108, 9...I think about this selection process like this, would I want to group my data later on by this variable. Grouping the data by everyone with the name "A-bomb" probably won't help us. But grouping by race, gender, or even skin-color could be helpful for graphs and analyses later on.

Cleaning Character Data

Cleaning up leading and trailing whitespace

# whitespace changes the data" hi" == "hi"## [1] FALSE# case sensitivity matters"Hi" == "hi"## [1] FALSEOne nice thing about R and the readr package, is that it automatically trims the leading and trailing whitespace from your data.

We can clean up our character data using the stringr package.

Cleaning Character Data

Before we clean up character data, we need to carefully consider whether the action we take cleaning could result in a loss of information.

data_superhero %>% mutate_at(.vars = c("gender", "eye_color", "race", "hair_color", "skin_color", "alignment", "publisher"), .funs = str_to_lower) %>% glimpse()## Rows: 734## Columns: 11## $ id <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Abra...## $ gender <chr> "male", "male", "male", "male", "male", "male", "male", ...## $ eye_color <chr> "yellow", "blue", "blue", "green", "blue", "blue", "blue...## $ race <chr> "human", "icthyo sapien", "ungaran", "human / radiation"...## $ hair_color <chr> "no hair", "no hair", "no hair", "no hair", "black", "no...## $ height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191, 1...## $ publisher <chr> "marvel comics", "dark horse comics", "dc comics", "marv...## $ skin_color <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-", "...## $ alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "go...## $ weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108, 9...

Cleaning Character Data

Before we clean up character data, we need to carefully consider whether the action we take cleaning could result in a loss of information.

data_superhero %>% mutate_at(.vars = c("gender", "eye_color", "race", "hair_color", "skin_color", "alignment", "publisher"), .funs = str_to_lower) %>% glimpse()## Rows: 734## Columns: 11## $ id <dbl> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16...## $ name <chr> "A-Bomb", "Abe Sapien", "Abin Sur", "Abomination", "Abra...## $ gender <chr> "male", "male", "male", "male", "male", "male", "male", ...## $ eye_color <chr> "yellow", "blue", "blue", "green", "blue", "blue", "blue...## $ race <chr> "human", "icthyo sapien", "ungaran", "human / radiation"...## $ hair_color <chr> "no hair", "no hair", "no hair", "no hair", "black", "no...## $ height <dbl> 203, 191, 185, 203, -99, 193, -99, 185, 173, 178, 191, 1...## $ publisher <chr> "marvel comics", "dark horse comics", "dc comics", "marv...## $ skin_color <chr> "-", "blue", "red", "-", "-", "-", "-", "-", "-", "-", "...## $ alignment <chr> "good", "good", "good", "bad", "bad", "bad", "good", "go...## $ weight <dbl> 441, 65, 90, 441, -99, 122, -99, 88, 61, 81, 104, 108, 9...

Profiling Categorical Data

So far we have cleaned/checked id and opted to leave name, let's continue by taking a peak at gender and alignment.

fct_count(data_superhero$gender)## # A tibble: 3 x 2## f n## <fct> <int>## 1 - 29## 2 female 200## 3 male 505fct_count(data_superhero$alignment)## # A tibble: 4 x 2## f n## <fct> <int>## 1 - 7## 2 bad 207## 3 good 496## 4 neutral 24There is a bizarre category with a value of "-", this probably indicates missing data for the character data within our dataset.

So let's go back to our cleaning script and slightly alter the read_csv() function.

Missing Character Data

The read_csv() function has many additional arguments which can alter how the data is imported into R.

We can automatically insert NA values when it encounters specific character values via the "na" argument.

data_superhero <- read_csv(file = here("data", "heroes_information.csv"), na = c("NA","","-","."))Missing Character Data

The read_csv() function has many additional arguments which can alter how the data is imported into R.

We can automatically insert NA values when it encounters specific character values via the "na" argument.

data_superhero <- read_csv(file = here("data", "heroes_information.csv"), na = c("NA","","-","."))

Explicit Coercion

If you want more control over the coercion of your data into other atomic types or objects, then I recommend building a chain of functions. Testing each as you go along.

I recommend the readr::parse_*() functions for coercion.

data_superhero <- data_superhero %>% mutate(gender_fct = parse_factor(gender, levels = c("female","male")), alignment_fct = parse_factor(alignment, levels = c("good","neutral","bad")))# example of values with no level for them to belong toparse_factor(c("male","m","female"), levels = c("male","female"))## Warning: 1 parsing failure.## row col expected actual## 2 -- value in level set mAs a side, note we will cover what to do if you don't know all of the levels in advance and you want R to figure it out for you.

Reminder: problems()

Keep in mind that problems() comes from the readr package, so it only tracks problems from importing functions using the form readr::read_*() or coercion functions using the form readr::parse_*() from the readr package.

# check the whole dataset for parsing problems arising form importproblems(data_superhero)# check a single variable after using a parse_*() function for exlplicit coercionproblems(data_superhero$gender_fct)

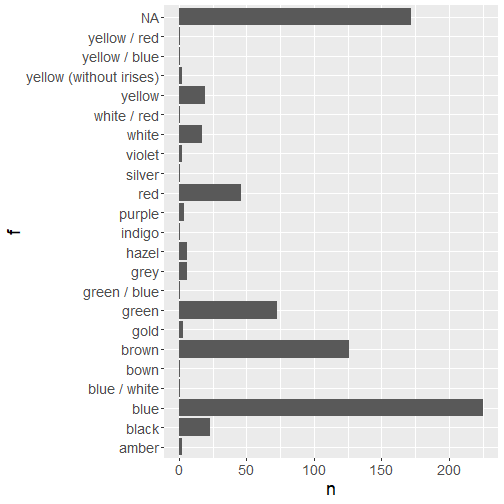

eye_color

Let's keep going to see what else we can discover through profiling our data .

fct_count(data_superhero$eye_color) %>% arrange(f) %>% View()## # A tibble: 23 x 2## f n## <fct> <int>## 1 amber 2## 2 black 23## 3 blue 225## 4 blue / white 1## 5 bown 1## 6 brown 126## 7 gold 3## 8 green 73## 9 green / blue 1## 10 grey 6## # ... with 13 more rowsEdit eye_color

# replace all instances of "bown" eye_color with "brown"data_superhero %>% mutate(eye_color = if_else(condition = eye_color == "bown", true = "brown", false = eye_color)) %>% # Check that there are no cases of "bown" remaining filter(eye_color == "bown")## # A tibble: 0 x 13## # ... with 13 variables: id <dbl>, name <chr>, gender <chr>, eye_color <chr>,## # race <chr>, hair_color <chr>, height <dbl>, publisher <chr>,## # skin_color <chr>, alignment <chr>, weight <dbl>, gender_fct <fct>,## # alignment_fct <fct>- Start with our Cleaned (so far) data, then ...

mutate()theeye_colorvariable, then ...- check each value of eye_color check if our condition is true

- If true, then replace that value with "brown"

- If false, keep the original value of

eye_color

- check each value of eye_color check if our condition is true

- keep only the rows for superheroes with an eye_color exactly equal to "bown"

I have found that frequently, there are many more levels to a factor than I anticipated, so rather than examining a table I prefer to visually assess the levels and their frequencies within my dataset. But the missing data still obscures the lower counts.

Visualize skin_color

p <- fct_count(data_superhero$skin_color) %>% arrange(f) %>% tidyr::drop_na(f) %>% ggplot(aes(x = f, y = n)) + geom_col() + coord_flip() + theme(text = element_text(size = 18))# generate interactive plotplotly::ggplotly(p)Let's try again, but let's insert another function from the tidyr package to drop rows which have a missing level.

Note that the missing data have not been removed from our cleaned data set, there were only omitted for this plot

Edit skin_color

# replace all instances of "gray" skin_color with "grey"data_superhero %>% mutate(skin_color = if_else(condition = skin_color == "gray", true = "grey", false = skin_color)) %>%# Check that there are no cases of "gray" remaining filter(skin_color == "gray")## # A tibble: 0 x 13## # ... with 13 variables: id <dbl>, name <chr>, gender <chr>, eye_color <chr>,## # race <chr>, hair_color <chr>, height <dbl>, publisher <chr>,## # skin_color <chr>, alignment <chr>, weight <dbl>, gender_fct <fct>,## # alignment_fct <fct>Edit skin_color

# replace all instances of "gray" skin_color with "grey"data_superhero %>% mutate(skin_color = if_else(condition = skin_color == "gray", true = "grey", false = skin_color)) %>%# Check that there are no cases of "gray" remaining filter(skin_color == "gray")## # A tibble: 0 x 13## # ... with 13 variables: id <dbl>, name <chr>, gender <chr>, eye_color <chr>,## # race <chr>, hair_color <chr>, height <dbl>, publisher <chr>,## # skin_color <chr>, alignment <chr>, weight <dbl>, gender_fct <fct>,## # alignment_fct <fct>

Structural Missingness

These are values that should be missing in your data set dependent on the structure of our data set.

For instance, imagine a questionnaire which asked for the marital status of a subject, and the subsequent question asked, if you answered "married", then how long have you been married?

If you didn't answer married, then you must have a missing answer here.

survey_data %>% filter(married == TRUE, !is.na(joint_income)) %>% ViewMandatory Constraints

In a nutshell, this pertains to variables in your data which MUST NOT be missing. For instance, subject/participant numbers, and key variables for modelling should all be present.

Constraints can be univariate (like an ID variable) or multivariate (like an ID variable, and multiple time-points)

Take Home Message

- Cleaning your data can still be a time consuming (but important) endeavour.

- Care must be taken at each step to ensure that our data is as error-free as possible

- There isn't a definitive checklist for data quality, but there are some things to look for depending on the type of data you are dealing with.

- Know what to look for takes practice, and the R code presented today should hopefully speed up your data cleaning

- Remember, cleaning can be an iterative process.

- Each error you fix has the potential to uncover another previously obfuscated errors.