Mind the gap:

Assessing current pedagogical approaches to teaching effect size estimation and interpretation in university level psychology statistics classrooms

Mark Christopher Adkins

York University

A bit about me

About myself:

I'm a sixth-year PhD student at York University in the Department of Psychology in the Quantitative Methods area. My research interests are loosely about open science practices, statistical pedagogy, Monte Carlo simulations, effect size estimates, and integrating technology into classrooms. I've taught short courses introducing R and the Tidyverse, as well as workshops on data cleaning and preregistration.

Effect Sizes

"the amount of anything of interest"

Cumming & Fidler, 2009

"some magnitude (or size) of the impact (or effect) of a predictor on an outcome variable"

Pek & Flora, 2018

"Quantitative reflection of the magnitude of some phenomenon that is used for the purpose of addressing a question of interest"

Kelley & Preacher, 2012

Effect sizes are a measure of the “practical significance” of an effect of interest. In other words, when interpreted in the context of a study, does an effect have meaningful, real-world implications?

Effect sizes can still be hard to grasp, particularly when the name itself invokes notions of a causal relationship. Can we still meaningfully call something an effect size in an observational study? The effect of what?

For better or worse, the causal implications of effect sizes are not considered in this study.

The New Statistics

"recommended practices, including estimation based on effect sizes, confidence intervals, and meta-analysis"

Cumming, 2014

How new are they?

- Effect sizes have their own history dating back to around 1940

- Though their rise to popularity in recent decades has been speculated to be a reaction to Null Hypothesis Significance Testing (Huberty, 2002; Wilkinson, 1999), effect sizes are vitally important because they have their own intrinsic and indispensable value for psychological research

Effect Size Usage

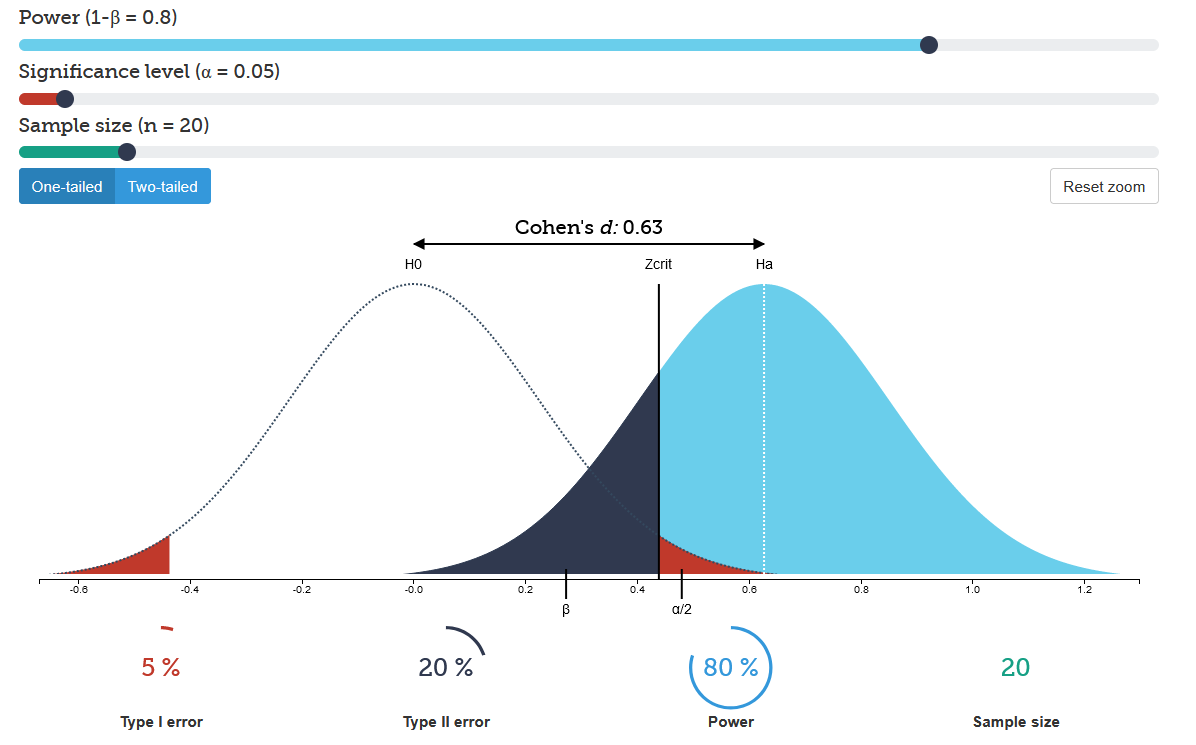

Power Analyses

- Integral part of every power analysis

- Often required by:

  - funding bodies

  - associations

  - journal editors

Sample Size Planning
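
To make the link between effect sizes and sample size planning concrete, here is a minimal sketch. It assumes a two-sided, two-group comparison of means with equal group sizes and uses the normal approximation rather than an exact t-based calculation, so the numbers can be a participant or two lower than dedicated power software would report. The function name `n_per_group` is made up for this illustration.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants needed per group to detect a standardized
    mean difference d (normal approximation, two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / d) ** 2)


# The required sample size depends heavily on the effect size that is assumed.
for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Because the assumed effect size is squared in the denominator, halving it roughly quadruples the required sample, which is why the justification for that value matters.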

Reporting Practices

aka 'A Multi-Faceted Mess'

Crucial information is often omitted from published studies, such as:

- rationale for choosing an effect size

- which variant of an effect size was used

- estimates of uncertainty

Beribisky et al., 2019

55.20% of analyses failed to provide justification for effect sizes

34.67% cited prior research as the justification for their effect size estimate

While this method of effect size estimation is perfectly valid in many research contexts, there are many other methods/techniques that are underutilized or underreported. Regardless of which method a researcher decides is best, there is a lot of room for improvement in effect size reporting practices.

Omission of a researcher’s justification can be viewed at best as a space-saving practice, or at worst a failure to fully understand how critical this information is for accurate interpretation of effects within the context of a study.

Rational justification for any data-analytic choices should always be presented.

This is especially true for effect sizes because they provide other researchers with the necessary information to critically evaluate the results of that research.

Transparent and complete reporting of effect size computations and decisions is a crucial part of quantitative research, as it further aids meta-analytic work and increases the reproducibility of the research.

Estimation/Interpretation Techniques

Despite the many resources available for computing and interpreting effect sizes, they take a long time (if ever) to make their way into undergraduate and graduate level statistical training

Evidence indicates that it takes an exorbitant amount of time (if ever) to see changes reflected within statistics curricula (Cumming et al., 2007)

Estimation/Interpretation Techniques

Cook et al. (2014) conducted a literature review of biomedical and social science databases and outlined seven techniques.

Simulation-based effect size estimation is treated as an eighth technique for the purposes of the present research because of its growing utility and popularity.

I do want to pause for a moment to note that the techniques I will describe in the following slide are not mutually exclusive of each other. That is to say, researchers can use multiple methods to help interpret or estimate effect sizes.

Estimation/Interpretation Techniques

Technique 1:

Anchor Based

Using an anchor or reference value with known substantive meaning against which to compare the magnitude of differences. E.g., comparing patients who showed at least a certain level of improvement with patients who showed no improvement on a specific scale/instrument.

Technique 2:

Distribution Based

Interpreting the size of an effect relative to the precision of the scale used to measure that effect.

Distribution based:

- NHST precision/error: is the observed mean within 2 SD of the mean under the null hypothesis?

- NHST tests themselves

- Strictly speaking, I don't consider an NHST test statistic to be an effect size in and of itself, but there is something to be said for using the precision of a test.

- Given that a test statistic is tied closely to the sample size, I would argue that this is not the best use case.
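
The point about test statistics being tied to sample size can be illustrated with a short sketch. It assumes an independent-samples t-test with two equal-sized groups, where the t statistic equals Cohen's d multiplied by the square root of half the per-group sample size. The helper name `t_from_d` is made up for this illustration.

```python
import math


def t_from_d(d, n_per_group):
    """t statistic implied by Cohen's d for two equal-sized independent groups."""
    return d * math.sqrt(n_per_group / 2)


# Hold the effect size fixed and vary only the sample size.
for n in (20, 80, 320):
    print(f"d = 0.3, n per group = {n}: t = {t_from_d(0.3, n):.2f}")
```

The standardized effect is identical in every row, yet the test statistic doubles each time the sample size is quadrupled, so the statistic on its own says little about magnitude.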

Estimation/Interpretation Techniques

Technique 3:

Health-Economic (cost-benefit analysis)

Evaluating the costs involved in increasing an outcome by a certain magnitude. E.g., how much effort should a student exert using new study materials to increase their grade on a test by 5%?

Technique 4:

Opinion-Seeking (expert opinion)

Consulting with experts within a substantive area to determine important or plausible magnitudes of an effect.

Estimation/Interpretation Techniques

Technique 5:

Pilot-Study

Conducting a smaller, though similar, study to produce an estimate of the effect size in question.
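
One thing worth keeping in mind with pilot studies is how imprecise a small-sample estimate can be. The sketch below assumes the common large-sample approximation for the standard error of Cohen's d in a two-group design, and the sample sizes and the value d = 0.5 are made-up illustration values, not results from any study. The function name `d_confidence_interval` is hypothetical.

```python
import math
from statistics import NormalDist


def d_confidence_interval(d, n1, n2, level=0.95):
    """Approximate confidence interval for Cohen's d (large-sample formula)."""
    se = math.sqrt((n1 + n2) / (n1 * n2) + d ** 2 / (2 * (n1 + n2)))
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return d - z * se, d + z * se


# Same observed d, very different precision (illustrative numbers only).
for n in (10, 100):
    low, high = d_confidence_interval(0.5, n, n)
    print(f"n per group = {n}: d = 0.5, approx. 95% CI [{low:.2f}, {high:.2f}]")
```

With ten participants per group the interval spans zero and reaches well past a "large" effect, which is a reason to report the uncertainty alongside any pilot-based estimate.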

Technique 6:

Review of Evidence Base (literature review or meta-analysis)

Conducting or using a meta-analysis to obtain or interpret an effect size estimate. A literature review can be conducted for the same purpose.
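
As a rough sketch of how a meta-analysis yields a single estimate, the example below pools effect sizes with inverse-variance weights under a fixed-effect model. This is only one of several pooling models and is an assumption of the sketch; the three study values are invented for illustration, and `pool_fixed_effect` is a hypothetical helper name.

```python
def pool_fixed_effect(estimates, variances):
    """Inverse-variance weighted average of effect sizes and its standard error."""
    weights = [1 / v for v in variances]  # more precise studies get more weight
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    standard_error = (1 / sum(weights)) ** 0.5
    return pooled, standard_error


# Invented effect sizes (Cohen's d) and their sampling variances.
study_d = [0.30, 0.55, 0.42]
study_var = [0.04, 0.09, 0.02]

pooled_d, pooled_se = pool_fixed_effect(study_d, study_var)
print(f"pooled d = {pooled_d:.2f}, SE = {pooled_se:.2f}")
```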

Estimation/Interpretation Techniques

Technique 7:

Standardized effect sizes

Using published or recommended guidelines of standardized effect sizes to interpret the magnitude of an effect.
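
As an illustration of this technique, the sketch below applies Cohen's (1988) widely cited conventions for d (0.2 small, 0.5 medium, 0.8 large). Choosing these particular cut-offs is an assumption of the example; other guidelines exist, and the function name `label_cohens_d` is made up.

```python
def label_cohens_d(d):
    """Label the magnitude of Cohen's d using Cohen's (1988) conventions."""
    size = abs(d)  # direction does not matter for the label
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


for d in (0.1, 0.35, -0.6, 1.2):
    print(f"d = {d}: {label_cohens_d(d)}")
```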

Technique 8:

Simulation-based effect sizes

Conducting a simulation to estimate ranges of plausible effect sizes. Simulation results could also be used to establish a reference point for interpreting the magnitude of an effect.
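
A minimal sketch of what such a simulation might look like follows. It assumes a two-group design with normally distributed scores, equal variances, and a researcher-specified population effect size; all of those choices, along with the function names, are assumptions made for this illustration.

```python
import random
import statistics


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * statistics.variance(group_a)
        + (n_b - 1) * statistics.variance(group_b)
    ) / (n_a + n_b - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_var ** 0.5


def simulate_d(true_d, n_per_group, n_sims=2000, seed=1):
    """Simulate many studies and return the observed d from each one."""
    rng = random.Random(seed)
    results = []
    for _ in range(n_sims):
        treatment = [rng.gauss(true_d, 1) for _ in range(n_per_group)]
        control = [rng.gauss(0, 1) for _ in range(n_per_group)]
        results.append(cohens_d(treatment, control))
    return results


observed = simulate_d(true_d=0.5, n_per_group=30)
cuts = statistics.quantiles(observed, n=40)  # cut points every 2.5 percent
low, high = cuts[0], cuts[-1]                # 2.5th and 97.5th percentiles
print(f"middle 95% of simulated d values: {low:.2f} to {high:.2f}")
```

The resulting range gives a reference point: an observed effect can be compared against what studies of this size would plausibly produce under the assumed population value.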

Mind The Gap

Is there a problem with statistical training in which little to no time is spent teaching how to estimate, interpret, and report effect sizes?

Photo by Artur Tumasjan on Unsplash

While there appears to be a gap within the literature about understanding and reporting effect sizes, there are no data available pertaining to the existence of a gap within the classroom regarding the estimation and interpretation of effect sizes.

Specifically, I want to assess the prevalence (or lack thereof) of these eight techniques in the classroom.

- I already discussed reporting practices, which would be a crude measure of how often important details about effect sizes are missing.

I want to know why they are missing.

Do editors make authors trim their manuscripts, so that these details fall by the wayside?

Or is it a lack of statistical training/emphasis on understanding how to estimate and interpret effect sizes?

Maybe it's the case that researchers simply disagree with statistical experts about the vital importance of fully reporting effect sizes and interpreting them in the context of their study or line of research (attitudinal).

Barrier Related Hurdles

- Are some of these techniques more complicated than others leading to their under-use within a classroom setting?

- Do instructors themselves have sufficient training to teach these techniques?

- Can it also be the case that many instructors (grad students included) overly rely on published textbooks to create their lecture materials which may be out of date in relation to current statistical recommendations?

Photo by Interactive Sports on Unsplash

Are undergraduate teachers omitting this information on the assumption that it is more appropriate for graduate level students?

Can interactive exercises be developed for students to aid their understanding of these techniques?

Are graduate teachers assuming that sufficient basics were instilled in their students that effect size coverage within their own classrooms is redundant and consequently a waste of valuable class time?

Do instructors believe that curricular change is important or even necessary with regard to effect size training?

Or worse yet, are students adequately trained at all levels, but principal investigators or journal editors are influencing poor reporting practices against the overwhelming advice of statistical experts and association bodies?

Present Study

Three Phases

- Data collection

  - survey of statistics instructors at Canadian universities

- Design interactive modular activities

  - "plug-and-play" to make them easier to integrate into lectures or use for at-home learning

  - reduce instructor training

- Develop R package

  - to enable easy, effect-size-driven data generation functions

  - in-class demonstrations, homework assignments, exam questions, etc.
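
To give a sense of what "effect-size-driven" data generation could mean, here is a rough sketch. The planned package is in R and does not exist yet, so this Python example is not its API; the function names, defaults, and the choice to rescale each group so the sample reproduces the requested Cohen's d exactly are all assumptions made for illustration.

```python
import random
import statistics


def standardize(values):
    """Rescale values to have sample mean 0 and sample standard deviation 1."""
    m, s = statistics.mean(values), statistics.stdev(values)
    return [(v - m) / s for v in values]


def generate_two_groups(d, n_per_group, mean=50.0, sd=10.0, seed=None):
    """Return (control, treatment) scores whose sample Cohen's d equals d."""
    rng = random.Random(seed)
    control_z = standardize([rng.gauss(0, 1) for _ in range(n_per_group)])
    treatment_z = standardize([rng.gauss(0, 1) for _ in range(n_per_group)])
    control = [mean + sd * z for z in control_z]
    treatment = [mean + d * sd + sd * z for z in treatment_z]
    return control, treatment


def cohens_d(group_a, group_b):
    """Standardized mean difference for two equal-sized groups."""
    pooled_var = (statistics.variance(group_a) + statistics.variance(group_b)) / 2
    return (statistics.mean(group_a) - statistics.mean(group_b)) / pooled_var ** 0.5


control, treatment = generate_two_groups(d=0.5, n_per_group=25, seed=42)
print(f"sample d = {cohens_d(treatment, control):.3f}")
```

An instructor could hand students a dataset like this for a homework question while knowing in advance the effect size they should recover.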

Present Study

Currently in phase 1: Data Collection

Recently granted ethical clearance to begin data collection

- Survey was constructed using formr.org

Present Study

At the end of the study, participants will be asked if they want to be contacted to field-test the interactive lessons and provide feedback.

The feedback will be used to improve the lessons, which will likely be released/published on the web.